Unwrapping Spotify's Data Stack

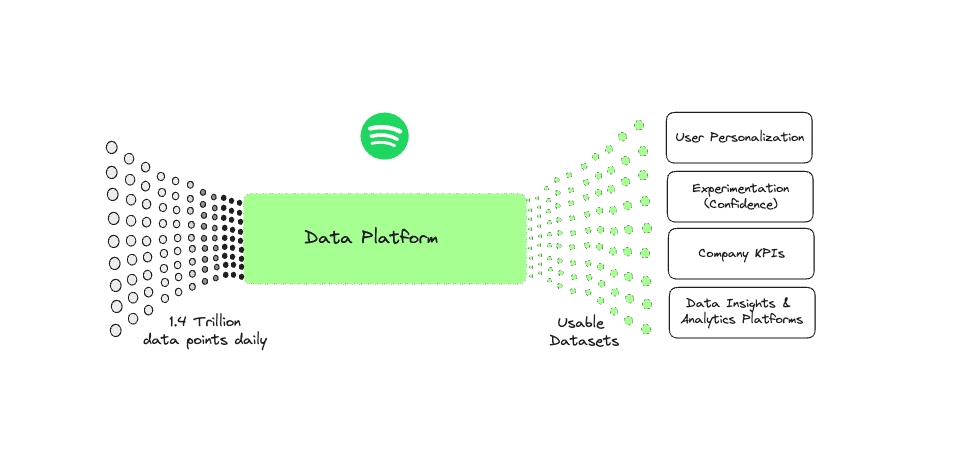

Spotify is one of the world's largest music streaming services, if not the largest, and takes pride in managing over 1.4 trillion data points a day.

Doing so has established them as one of the leaders in the data-driven age. In this blog, we'll uncover Spotify's data stack and understand how exactly the company was able to turn such vast amounts of data points into a viral trend: "Spotify Wrapped".

Data Stack: "Under the Hood"

Before we get to uncovering the powerhouse behind the hood, let's take a look at what their systems are capable of processing.

At the time of writing, Spotify's processing systems are capable of:

- Managing 700+ million active users globally

- Processing 1.4 trillion events daily spanning over 1,800 different event types that represent the interactions from users across their interfaces

- Storing 100+ million audio tracks

- Maintaining and orchestrating over 38,000 active data pipelines feeding downstream analytics

- Serving 5,000+ dashboards at any point in time

Core Infrastructure and Architecture

Storage

For application-specific storage, Spotify uses Apache Cassandra, a NoSQL database built for high availability and scalability. Cassandra gives Spotify a flexible way to store user data such as playlists and music libraries.

For big data storage of all the events being processed on Spotify, the company operates the largest Hadoop cluster in Europe. However, storage is also shared with Google's Cloud Storage (GCS) as part of their ongoing efforts to migrate to the cloud. All the data that gets delivered via the event delivery system is written in Avro format and partitioned into hourly buckets.
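To make the hourly bucketing concrete, here is a minimal sketch of how an event's timestamp could map to an hourly partition. The path layout and the `song_played` event type name are assumptions made for illustration, not Spotify's actual conventions.

```python
from datetime import datetime, timezone


def hourly_partition(event_type: str, ts_epoch_seconds: float) -> str:
    """Return a hypothetical partition path of the form <event_type>/<date>/<hour>."""
    # Convert the epoch timestamp to UTC so every writer agrees on the bucket.
    ts = datetime.fromtimestamp(ts_epoch_seconds, tz=timezone.utc)
    return f"{event_type}/{ts:%Y-%m-%d}/{ts:%H}"


# Two events within the same hour land in the same partition.
first = hourly_partition("song_played", 5 * 3600 + 10)
second = hourly_partition("song_played", 5 * 3600 + 3599)
```

Keeping each partition to one hour means a downstream job can pick up exactly the hours it has not yet processed.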

As for the data warehouse, following the migration to GCP, Spotify uses BigQuery as its centralized warehouse for all downstream analytical use cases. SQL-based workflows run through dbt, and the warehouse holds the data that the dashboards read.

Processing

In 2016, Spotify announced publicly that it had migrated its massive event delivery system from Kafka to GCP's Pub/Sub.

Spotify uses a combination of Apache Beam and Flink to process both its real-time and batch pipelines. Public information on this part of the stack is scarce, so not much more can be inferred.

Management

With this much data and this many pipelines, effective data management is essential. Spotify has built its own in-house tooling for it as part of its Data Platform, which is responsible for data lineage, metadata, access control, and retention policies.

Dashboards

Spotify internally uses both Tableau and Looker for meeting its dashboarding requirements. They also maintain a handy dashboard portal made in-house to make sure every dashboard used by over 6,000 employees is easily accessible via search.

Unwrapping the Tech Behind "Spotify Wrapped"

If you've ever browsed the internet, chances are that you've heard of the famous Spotify Wrapped campaign. It's among the most viral annual marketing campaigns run by the company, in which they compile a year's worth of metrics and insights for each user from the events processed daily. With 700+ million users each receiving a unique compilation of their metrics, the techniques applied behind the scenes are a feat in themselves.

Wrapped Data Sources

At the time of building the Wrapped summary, Spotify needs to combine several types of data sources such as:

- Streaming activity: which songs played, when, and how much

- User metadata: user ID, subscription tier, region, profile information

- Playback context: device, listening session type, playlist vs album, etc.

Performing a typical big data join with a scale of data that consists of millions of users and billions of events could be prohibitively expensive. Hence, the teams at Spotify made use of some clever optimization techniques to reduce the cost by over 50%.

Sort Merge Bucket (SMB)

A typical join over distributed data at this scale would cause massive amounts of data shuffling, with heavy disk utilization and network I/O driving up costs. Instead, Spotify writes its data in SMB format. As each source is consumed, every record is assigned to a bucket by hashing its key (e.g., userId) to an integer and taking that value modulo the number of buckets. This splits the data into the required number of groups, and the same key always lands in the same bucket. Records are also sorted by key before the write operation.
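The bucketing and sorting steps can be sketched as follows. This is a minimal in-memory illustration, not Spotify's implementation: the bucket count, the MD5-based hash, and the `write_smb` helper are all assumptions chosen for the example.

```python
import hashlib
from collections import defaultdict

NUM_BUCKETS = 4  # hypothetical; a real job sizes this to the dataset


def bucket_id(key: str, num_buckets: int = NUM_BUCKETS) -> int:
    """Hash the key to an integer, then take it modulo the bucket count."""
    # A stable hash is used because Python's built-in hash() is salted
    # per process for strings, so it would not agree across writers.
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_buckets


def write_smb(records, key_field="user_id", num_buckets=NUM_BUCKETS):
    """Group records into buckets, then sort each bucket by key."""
    buckets = defaultdict(list)
    for record in records:
        buckets[bucket_id(record[key_field], num_buckets)].append(record)
    # Each "bucket file" is represented here as a key-sorted list.
    return {b: sorted(rows, key=lambda r: r[key_field]) for b, rows in buckets.items()}


records = [
    {"user_id": "u3", "track": "a"},
    {"user_id": "u1", "track": "b"},
    {"user_id": "u2", "track": "c"},
    {"user_id": "u1", "track": "d"},
]
buckets = write_smb(records)
```

Because every source applies the same hash and bucket count, bucket 2 of the streaming data can only contain users that also fall into bucket 2 of the user metadata.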

As a result, when joining records from different sources (e.g., streaming events + user metadata + context), they only need to run a merge join over matching bucket files: since each file is already sorted by key, the join is a single sequential pass over both. No costly data shuffling takes place, and processing is much more efficient, with less disk and network I/O, lower resource consumption, and faster run times.
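The join over one pair of matching bucket files can be sketched as a single linear pass over two key-sorted inputs. This is a simplified illustration under the assumption that each bucket fits in a list; the `merge_join` helper and the sample fields are hypothetical.

```python
def merge_join(left, right, key="user_id"):
    """Inner-join two lists that are already sorted by `key`."""
    out, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        lk, rk = left[i][key], right[j][key]
        if lk < rk:
            i += 1  # left key has no match yet; advance left
        elif lk > rk:
            j += 1  # right key has no match yet; advance right
        else:
            # Find the run of rows on the right that share this key.
            j_end = j
            while j_end < len(right) and right[j_end][key] == lk:
                j_end += 1
            # Pair every left row in the run with every matching right row.
            while i < len(left) and left[i][key] == lk:
                for r in right[j:j_end]:
                    out.append({**left[i], **r})
                i += 1
            j = j_end
    return out


# Hypothetical contents of one matching bucket from two sources.
streams = [
    {"user_id": "a", "track": "t1"},
    {"user_id": "a", "track": "t2"},
    {"user_id": "c", "track": "t3"},
]
users = [
    {"user_id": "a", "tier": "premium"},
    {"user_id": "b", "tier": "free"},
]
joined = merge_join(streams, users)
```

Neither input is re-sorted or redistributed here, which is the property that lets the join skip the shuffle.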

Incremental Aggregation

Once the data has been written in SMB format, the team can read the records keyed by userId from these three data sources and aggregate them over the year. To avoid overloading a single ETL job with a whole year's worth of streaming events, the team instead runs smaller jobs that aggregate the data per week. They then combine all the weekly partitions into a single large partition that holds the full year's data.
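A minimal sketch of the weekly-then-yearly pattern is shown below. The event fields (`user_id`, `track_id`, `ms_played`) and both function names are assumptions for illustration; the real pipeline's schema is not public in this detail.

```python
from collections import Counter


def aggregate_week(events):
    """Sum listening time per (user_id, track_id) for one week of events."""
    totals = Counter()
    for e in events:
        totals[(e["user_id"], e["track_id"])] += e["ms_played"]
    return totals


def roll_up(weekly_totals):
    """Combine the small weekly aggregates into one yearly aggregate."""
    yearly = Counter()
    for week in weekly_totals:
        yearly.update(week)  # Counter.update adds values for matching keys
    return yearly


week1 = [
    {"user_id": "u1", "track_id": "t1", "ms_played": 1000},
    {"user_id": "u1", "track_id": "t1", "ms_played": 500},
]
week2 = [
    {"user_id": "u1", "track_id": "t1", "ms_played": 200},
    {"user_id": "u2", "track_id": "t9", "ms_played": 300},
]
yearly = roll_up([aggregate_week(week1), aggregate_week(week2)])
```

The yearly roll-up only touches the compact weekly totals, never the raw events, so a failed or late week can be recomputed on its own.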

The output is then used to generate each user's Wrapped summary: top songs, total minutes, top artists/genres, and so on. The pipeline writes results keyed by user_id. These summaries feed the "Wrapped" UI generation and personalization logic.
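As a rough illustration of this last step, the sketch below turns yearly per-track totals into a per-user summary. The input shape, the `wrapped_summary` function, and the output fields are hypothetical, and artists and genres are omitted for brevity.

```python
import heapq
from collections import defaultdict


def wrapped_summary(yearly_totals, top_n=5):
    """Build {user_id: summary} from {(user_id, track_id): ms_played}."""
    per_user = defaultdict(dict)
    for (user_id, track_id), ms in yearly_totals.items():
        per_user[user_id][track_id] = ms

    summaries = {}
    for user_id, tracks in per_user.items():
        # Pick the most-played tracks without sorting the full list.
        top = heapq.nlargest(top_n, tracks.items(), key=lambda kv: kv[1])
        summaries[user_id] = {
            "total_minutes": sum(tracks.values()) // 60_000,
            "top_tracks": [track_id for track_id, _ in top],
        }
    return summaries


yearly_totals = {
    ("u1", "t1"): 120_000,
    ("u1", "t2"): 300_000,
    ("u1", "t3"): 60_000,
    ("u2", "t1"): 60_000,
}
summaries = wrapped_summary(yearly_totals, top_n=2)
```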

Wrapping Up

Spotify's data infrastructure is a testament to how thoughtful architecture and clever optimization can handle massive scale efficiently. From SMB joins to incremental aggregation, every decision reflects a deep understanding of distributed systems and cost optimization—all to deliver that personalized Wrapped experience we eagerly await every December.